K8s installation (single master, v1.26.2)

k8s cluster architectures

1) Single master with multiple nodes

2) Multiple masters with multiple nodes (high-availability architecture)

1. Environment planning

| Name | Operating system | Configuration (memory/CPU/disk) | IP address |
|---|---|---|---|
| k8s-master | Rocky Linux 9.1 | 4G/4C/100G | 192.168.3.41 |
| k8s-slave1 | Rocky Linux 9.1 | 4G/4C/100G | 192.168.3.42 |
| k8s-slave2 | Rocky Linux 9.1 | 4G/4C/100G | 192.168.3.43 |

2. Role planning

| Name | Deployed components |
|---|---|
| master | etcd, kube-apiserver, kube-controller-manager, kube-scheduler, kubectl, kubeadm, kubelet, kube-proxy, Calico |
| slave | kubectl, kubeadm, kubelet, kube-proxy, Calico |

3. Component versions

| Component | Version | Notes |
|---|---|---|
| Rocky Linux | 9.1 | |
| etcd | 3.5.6-0 | Deployed as a container; data is mounted to a local path by default |
| coredns | v1.9.3 | |
| kubeadm | v1.26.2 | dockershim is not supported from v1.24 onward |
| kubectl | v1.26.2 | |
| kubelet | v1.26.2 | |
| Calico | v3.25 | https://docs.tigera.io/ |
| cri-dockerd | v0.3.1 | https://github.com/Mirantis/cri-dockerd/ |
| kubernetes-dashboard | v2.7.0 | https://github.com/kubernetes/dashboard |

4. Preparation

4.1 Set the hostname

A hostname may contain only lowercase letters, digits, ".", and "-", and must start and end with a lowercase letter or a digit.

# Set the hostname on the master

hostnamectl set-hostname k8s-master

# Set the hostname on slave1

hostnamectl set-hostname k8s-slave1

# Set the hostname on slave2

hostnamectl set-hostname k8s-slave2
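
The hostname rule above can be checked before calling hostnamectl. The following is a minimal sketch: the function name is_valid_hostname is made up for illustration, and the pattern encodes only the rule stated in this section (lowercase letters, digits, "." and "-", starting and ending with a lowercase letter or digit).

```shell
# Hypothetical helper: exit status 0 if the name follows the rule in 4.1.
is_valid_hostname() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]([a-z0-9.-]*[a-z0-9])?$'
}

# Example: validate a candidate name before applying it.
if is_valid_hostname "k8s-master"; then
  echo "k8s-master is acceptable"
fi
```

A name such as K8s_Master fails the check because of the uppercase letters and the underscore.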

4.2 Configure hosts resolution

cat >>/etc/hosts<<EOF

192.168.3.41 k8s-master

192.168.3.42 k8s-slave1

192.168.3.43 k8s-slave2

EOF
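
Running the cat >> command above twice leaves duplicate lines in /etc/hosts. If that matters, the entries can be added idempotently. The sketch below is one possible approach; add_host_entry is a hypothetical helper name, and it only appends a line when the hostname is not already present on a non-comment line.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME already has an entry.
add_host_entry() {
  hosts_file="$1"; ip="$2"; name="$3"
  # Look for NAME as a hostname field (field 2 or later) on any non-comment line.
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$hosts_file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}

# Usage as root on every node (not run here):
#   add_host_entry /etc/hosts 192.168.3.41 k8s-master
#   add_host_entry /etc/hosts 192.168.3.42 k8s-slave1
#   add_host_entry /etc/hosts 192.168.3.43 k8s-slave2
```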

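4.3 Check that the required ports are open

If there are no security-group restrictions between the nodes (machines on the internal network can reach each other freely), skip this step. Otherwise, make sure at least the following ports are reachable:

k8s-master node:

TCP: 6443, 2379, 2380, 60080, 60081

UDP: all ports open

k8s-slave nodes:

UDP: all ports open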

4.4 Configure iptables

iptables -P FORWARD ACCEPT

4.5 Disable swap

swapoff -a

# Prevent the swap partition from being mounted automatically at boot

sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
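
The sed command above matches only lines where swap is surrounded by spaces, and it adds another "#" each time it is run. A slightly more defensive variant is sketched below, under the assumption that any uncommented line with a whitespace-delimited swap field should be disabled; comment_out_swap is a hypothetical helper name, and trying it on a copy of /etc/fstab first is advisable.

```shell
# Hypothetical helper: comment out uncommented swap entries in an fstab-style file.
# Lines that already start with "#" are left untouched, so reruns are harmless.
comment_out_swap() {
  fstab="$1"
  tmp=$(mktemp)
  sed -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab" > "$tmp" && cat "$tmp" > "$fstab"
  rm -f "$tmp"
}

# Usage as root (not run here):
#   cp /etc/fstab /etc/fstab.bak
#   comment_out_swap /etc/fstab
```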

4.6 Disable firewalld

systemctl disable firewalld && systemctl stop firewalld

4.7 Disable SELinux

sed -ri 's#(SELINUX=).*#\1disabled#' /etc/selinux/config

setenforce 0

4.8 Adjust kernel parameters

4.8.1 Create the kernel parameter configuration file

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward=1

vm.max_map_count=262144

EOF

4.8.2 Load the kernel module

modprobe br_netfilter

4.8.3 Apply the parameters

sysctl -p /etc/sysctl.d/k8s.conf
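
The modprobe in 4.8.2 loads br_netfilter only until the next reboot. On systemd-based systems, modules listed in files under /etc/modules-load.d/ are loaded at boot, so the module can be made persistent there. The sketch below assumes that mechanism; the helper name persist_modules and the file name k8s.conf are illustrative choices, not something this guide prescribes.

```shell
# Hypothetical helper: write one module name per line to a modules-load.d file.
persist_modules() {
  conf="$1"; shift
  printf '%s\n' "$@" > "$conf"
}

# Usage as root on every node (not run here):
#   persist_modules /etc/modules-load.d/k8s.conf br_netfilter
```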

4.9 Configure the yum repositories

4.9.1 Switch the yum mirror to Shanghai Jiao Tong University

sed -e 's|^mirrorlist=|#mirrorlist=|g' \
    -e 's|^#baseurl=http://dl.rockylinux.org/$contentdir|baseurl=https://mirrors.sjtug.sjtu.edu.cn/rocky|g' \
    -i.bak \
    /etc/yum.repos.d/rocky*.repo

4.9.2 Build the metadata cache

dnf makecache

4.9.3 Configure the Docker repository

curl -o /etc/yum.repos.d/docker-ce.repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

4.9.4 Configure the k8s package repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

4.10 Install Docker

4.10.1 Install the latest version of Docker

yum -y install docker-ce

4.10.2 Configure a Docker registry mirror

mkdir -p /etc/docker

cat <<EOF >/etc/docker/daemon.json
{
  "registry-mirrors": [
    "https://doxo3j7y.mirror.aliyuncs.com"
  ]
}
EOF

4.10.3 Start Docker

systemctl enable docker && systemctl start docker

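5. Deploy Kubernetes

5.1 Install cri-dockerd

Kubernetes removed dockershim in v1.24, and Docker Engine does not support the CRI specification by default, so the two can no longer be integrated directly. To bridge the gap, Mirantis and Docker jointly created the cri-dockerd project, which provides a shim that exposes Docker Engine through the CRI so that Kubernetes can control Docker via the CRI.

5.1.1 Install libcgroup

Skip this step if the cri-dockerd installation does not report an error.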

rpm -ivh https://vault.centos.org/centos/8/BaseOS/x86_64/os/Packages/libcgroup-0.41-19.el8.x86_64.rpm

5.1.2 Download cri-dockerd

wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.1/cri-dockerd-0.3.1-3.el8.x86_64.rpm

5.1.3 Install cri-dockerd

dnf install -y cri-dockerd-0.3.1-3.el8.x86_64.rpm

5.1.4 Edit the cri-docker service file

vi /usr/lib/systemd/system/cri-docker.service

Comment out the original ExecStart line and add the new one below it:

#ExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint fd://

ExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint fd:// --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.9
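
The same change can be made without an editor. The following is a rough sketch, not the procedure used in this guide: instead of commenting out the old line and adding a new one, it appends the flag to the existing ExecStart line in place. The helper name add_pause_image_flag is hypothetical.

```shell
# Hypothetical helper: append --pod-infra-container-image to the cri-dockerd
# ExecStart line of a unit file, unless the flag is already present.
add_pause_image_flag() {
  unit_file="$1"; image="$2"
  if grep -q -- '--pod-infra-container-image' "$unit_file"; then
    return 0
  fi
  tmp=$(mktemp)
  sed -E "s|^(ExecStart=.*cri-dockerd.*)\$|\\1 --pod-infra-container-image=${image}|" "$unit_file" > "$tmp" &&
    cat "$tmp" > "$unit_file"
  rm -f "$tmp"
}

# Usage as root (not run here):
#   add_pause_image_flag /usr/lib/systemd/system/cri-docker.service \
#     registry.aliyuncs.com/google_containers/pause:3.9
```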

5.1.5 Reload the service files

systemctl daemon-reload

5.1.6 Enable cri-docker at boot and start it

systemctl enable cri-docker && systemctl start cri-docker

5.2 Install kubeadm, kubelet, and kubectl

5.2.1 Install with yum

yum install -y kubelet-1.26.2 kubeadm-1.26.2 kubectl-1.26.2 --disableexcludes=kubernetes

5.2.2 Check the kubeadm version

kubeadm version

5.2.3 Enable kubelet at boot

systemctl enable kubelet

6. Master node configuration

6.1 Initialize the control plane

kubeadm init --apiserver-advertise-address=192.168.3.41 --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.26.2 --service-cidr=10.96.0.0/12 --pod-network-cidr=10.244.0.0/16 --cri-socket=unix:///var/run/cri-dockerd.sock --ignore-preflight-errors=all
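
The same settings can also be kept in a configuration file, which is easier to review and keep under version control than a long flag list. The sketch below writes such a file using the kubeadm.k8s.io/v1beta3 API with values copied from the flags above. The helper name write_kubeadm_config and the output file name are illustrative assumptions, the version is set to v1.26.2 to match the component table, and --ignore-preflight-errors is left out of this sketch.

```shell
# Hypothetical helper: write a kubeadm config file mirroring the init flags above.
write_kubeadm_config() {
  out="$1"
  cat > "$out" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.3.41
nodeRegistration:
  criSocket: unix:///var/run/cri-dockerd.sock
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.26.2
imageRepository: registry.aliyuncs.com/google_containers
networking:
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16
EOF
}

# Usage on the master (not run here):
#   write_kubeadm_config kubeadm-config.yaml
#   kubeadm init --config kubeadm-config.yaml
```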

The following output indicates that initialization succeeded:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.3.41:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:ce94e7cc23562df6c1100102dfc867cf9455f0e466d1c056a2e91372cd8e91cb

6.2 Configure kubectl client authentication

As the installation output instructs, run the following commands.

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

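**⚠️ Note:** At this point, kubectl get nodes should show the node as NotReady, because no network plugin has been configured yet.

If initialization fails, fix the problem reported in the error message, run kubeadm reset, and then run kubeadm init again.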

6.3 Make the master node schedulable (optional)

After a default deployment, the master node does not schedule workload pods. To let the master node take part in pod scheduling, run:

kubectl taint node k8s-master node-role.kubernetes.io/control-plane:NoSchedule-

Check the taint status:

kubectl describe node k8s-master |grep Taint

Taints: <none>

To stop the master from scheduling pods again, run:

kubectl taint node k8s-master node-role.kubernetes.io/control-plane:NoSchedule

Check the taint status:

kubectl describe node k8s-master |grep Taint

Taints: node-role.kubernetes.io/control-plane:NoSchedule

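7. Slave node configuration

7.1 Prerequisites

Complete every installation step in Sections 4 and 5 on each slave node first.

7.2 Add the slave nodes to the cluster

On each slave node, run the following command. It is printed in the output of a successful kubeadm init, so replace the token and hash below with the values printed by your own init run.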

kubeadm join 192.168.3.41:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:ce94e7cc23562df6c1100102dfc867cf9455f0e466d1c056a2e91372cd8e91cb \
    --cri-socket=unix:///var/run/cri-dockerd.sock

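⚠️ Note:

This is the command generated when the initialization in 6.1 completes, with --cri-socket appended.

The token is valid for 24 hours by default and cannot be used after it expires. In that case a new token is needed; the following command creates one and prints the full join command: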

kubeadm token create --print-join-command
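
The printed join command typically does not include the --cri-socket flag that the slave nodes in this guide need. The sketch below appends it; with_cri_socket is a hypothetical helper name, and the socket path is the one used throughout this guide.

```shell
# Hypothetical helper: append the cri-dockerd socket flag to a join command
# unless the command already carries a --cri-socket flag.
with_cri_socket() {
  join_cmd="$1"
  case "$join_cmd" in
    *--cri-socket*) printf '%s\n' "$join_cmd" ;;
    *) printf '%s --cri-socket=unix:///var/run/cri-dockerd.sock\n' "$join_cmd" ;;
  esac
}

# Usage on the master (not run here):
#   with_cri_socket "$(kubeadm token create --print-join-command)"
```

The resulting line can then be copied to each slave node and run there as root.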

After a successful join, the following output is shown:

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

7.3 Check that the slave nodes have joined

kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-master NotReady control-plane 55m v1.26.2 192.168.3.41 <none> Rocky Linux 9.1 (Blue Onyx) 5.14.0-162.6.1.el9_1.x86_64 docker://23.0.1

k8s-slave1 NotReady <none> 41m v1.26.2 192.168.3.42 <none> Rocky Linux 9.1 (Blue Onyx) 5.14.0-162.6.1.el9_1.x86_64 docker://23.0.1

k8s-slave2 NotReady <none> 4s v1.26.2 192.168.3.43 <none> Rocky Linux 9.1 (Blue Onyx) 5.14.0-162.6.1.el9_1.x86_64 docker://23.0.1

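8. Deploy the container network (CNI)

Calico is a pure Layer 3 data center networking solution and is currently one of the mainstream network solutions for Kubernetes.

8.1 Download the YAML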

wget https://docs.projectcalico.org/v3.25/manifests/calico.yaml

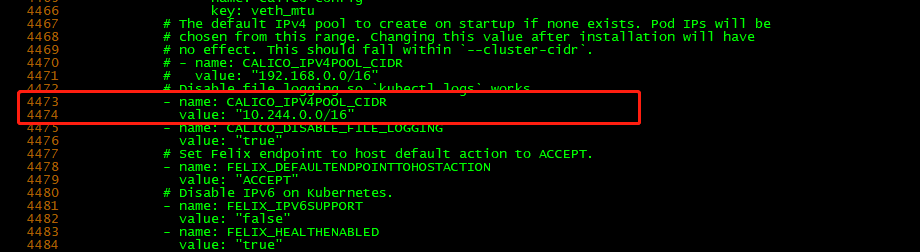

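8.2 Set the Pod network

After downloading, change the Pod network defined in the manifest (CALICO_IPV4POOL_CIDR) so that it matches the value passed to --pod-network-cidr in kubeadm init.

vi calico.yaml

# Add the following configuration at line 4473

- name: CALICO_IPV4POOL_CIDR
  value: "10.244.0.0/16"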
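
The edit can also be scripted. The sketch below rests on an assumption about the downloaded manifest that should be checked first: that it already contains the two lines in commented-out form ("# - name: CALICO_IPV4POOL_CIDR" followed by a "#   value: ..." line). If the file looks different, edit it by hand as described above. The helper name set_calico_pool_cidr is hypothetical.

```shell
# Hypothetical helper: uncomment the CALICO_IPV4POOL_CIDR entry and set its value.
# Assumes the entry exists as two consecutive commented lines in the manifest.
set_calico_pool_cidr() {
  manifest="$1"; cidr="$2"
  tmp=$(mktemp)
  sed "/# - name: CALICO_IPV4POOL_CIDR/{
s/# //
n
s/# //
s|value: \".*\"|value: \"${cidr}\"|
}" "$manifest" > "$tmp" && cat "$tmp" > "$manifest"
  rm -f "$tmp"
}

# Usage (not run here):
#   set_calico_pool_cidr calico.yaml 10.244.0.0/16
#   grep -A1 CALICO_IPV4POOL_CIDR calico.yaml
```

Removing "# " from both lines keeps the value line indented two spaces deeper than the "- name" line, which is what YAML requires here.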

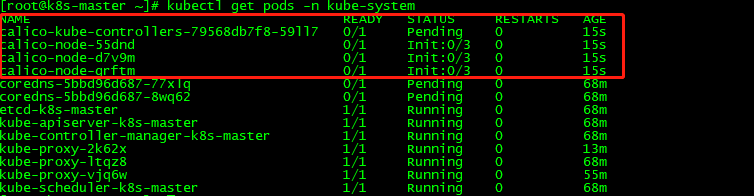

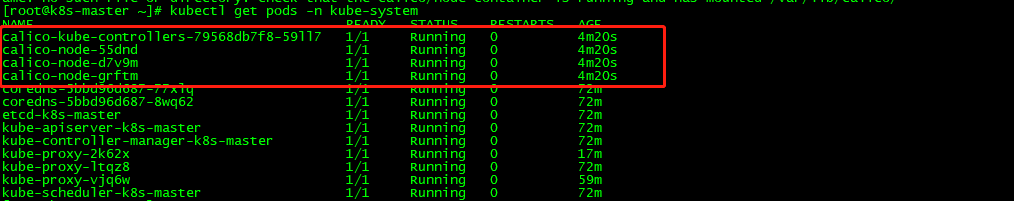

8.3 Deploy Calico

kubectl apply -f calico.yaml

kubectl get pods -n kube-system

Once all Calico pods are Running, the nodes become Ready as well.

9. Cluster verification

9.1 Verify that every node is Ready

kubectl get nodes # check that every node in the cluster is Ready

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane 77m v1.26.2

k8s-slave1 Ready <none> 64m v1.26.2

k8s-slave2 Ready <none> 22m v1.26.2
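
On larger clusters it is easier to list only the nodes that are not Ready. A minimal sketch, assuming the column layout shown above with STATUS as the second column; not_ready_nodes is a hypothetical helper name. A node whose status is anything other than exactly "Ready" (for example "Ready,SchedulingDisabled") is also reported.

```shell
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print the names of nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Usage on the master (not run here):
#   kubectl get nodes --no-headers | not_ready_nodes
```

Empty output means every node reports Ready.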

9.2 Create a test service

9.2.1 Create an nginx pod

kubectl run test-nginx --image=nginx:alpine

9.2.2 Check that the pod is running

kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

test-nginx 1/1 Running 0 71s 10.244.92.1 k8s-slave2 <none> <none>

9.2.3 Test that nginx is serving requests

curl 10.244.92.1

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

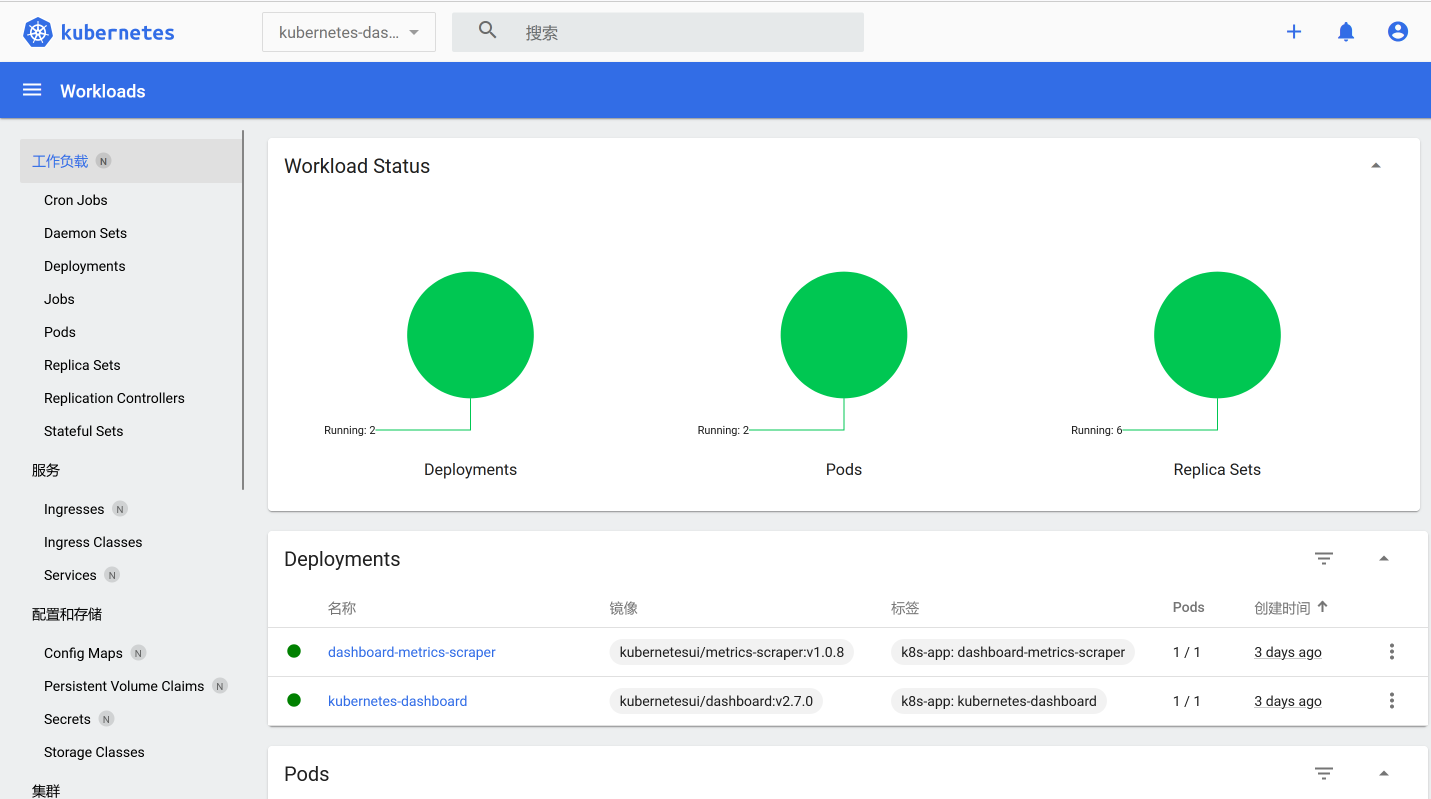

10. Deploy the Dashboard

The Dashboard is an official web UI for basic management of K8s resources.

Project page: https://github.com/kubernetes/dashboard

10.1 Download the YAML file

# Download the YAML file

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml

10.2 修改yaml文件

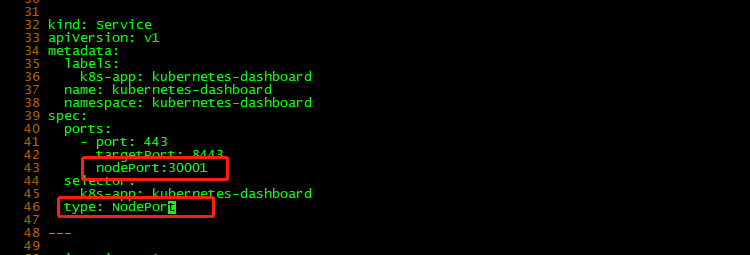

修改Service为NodePort类型 ,并指定访问端口。

vi recommended.yaml

...
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001 # mind the capitalization
  selector:
    k8s-app: kubernetes-dashboard
  type: NodePort # mind the capitalization
...
---

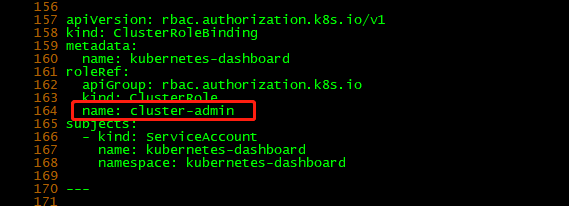

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  # The default kubernetes-dashboard role has very limited permissions; bind the more privileged cluster-admin instead
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---

10.3 Create the Dashboard

kubectl apply -f recommended.yaml

10.4 Check the running status

kubectl get pods -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-6d9cd57f74-7qn6j 1/1 Running 0 37s

kubernetes-dashboard-6788559d58-d9qdt 1/1 Running 0 37s

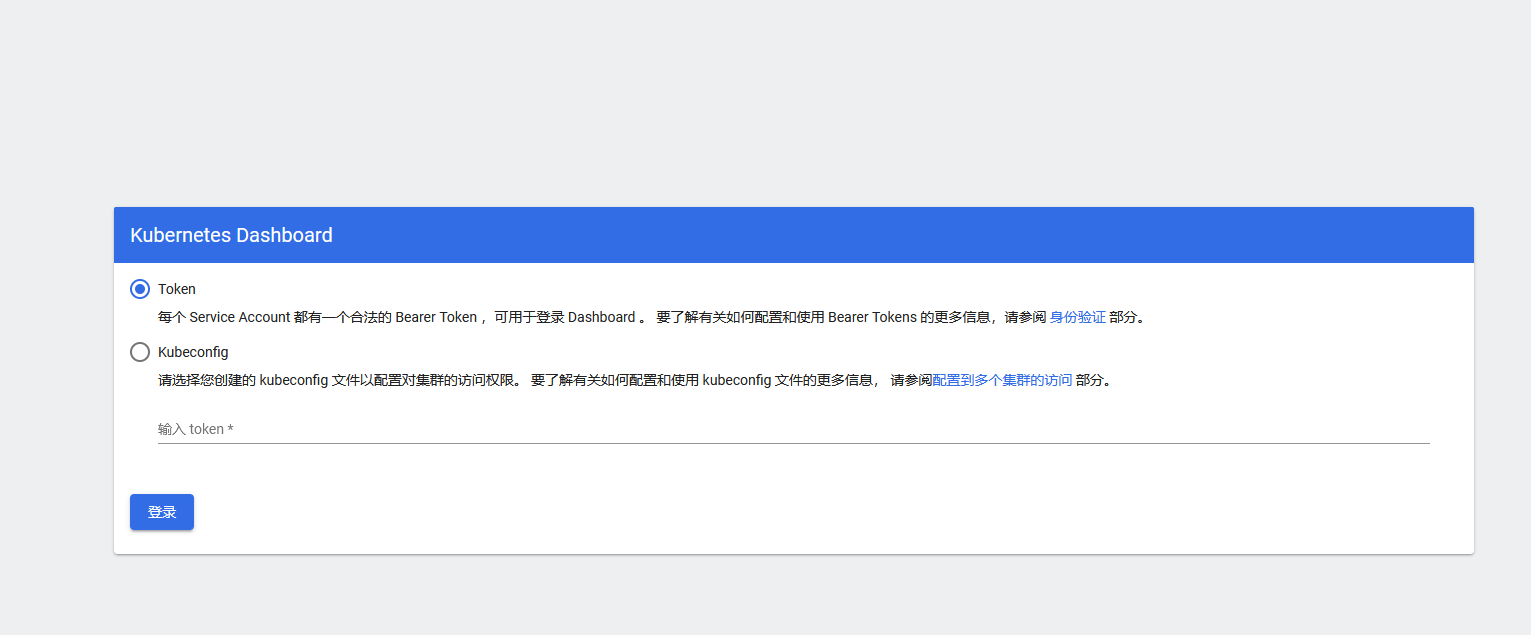

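10.5 Access the Dashboard

Open https://<node IP>:30001 in a browser, using the NodePort set in 10.2. If the page cannot be reached, check whether the kubernetes-dashboard pods are Running as follows.

First run kubectl get pods --all-namespaces -o wide to find the failing pod, then run kubectl describe pod <pod_name> -n <namespace> to inspect its events. This usually reveals the cause of the error.

10.6 Authorize access

Create a service account and bind it to the built-in cluster-admin cluster role: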

# Create the service account

kubectl create serviceaccount dashboard-admin -n kubernetes-dashboard

# Grant it permissions

kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kubernetes-dashboard:dashboard-admin

# Get a token for the account

kubectl create token dashboard-admin -n kubernetes-dashboard